LLM SEO: How AI Models Retrieve and Cite Content

LLM SEO is not just about ranking. It’s about being the source that AI engines trust and cite.

If you want a short answer:

LLM SEO is the practice of making your content easy to extract and cite so AI engines like ChatGPT, Gemini, and Perplexity can trust and reference you.

Most people don’t lose LLM SEO because they’re missing “keywords.”

They lose because the system doesn’t understand them well enough to cite them.

And in 2026, being cited is everything.

When AI Overviews answer the query and zero-click becomes the default, you’re competing for something bigger than rankings:

being cited

being remembered

being the entity users search for later

That’s what LLM SEO is designed to do.

LLM SEO isn’t a tactic. It’s a structure

AI engines don’t just rank pages.

They weigh entities and their relationships:

people

brands

products

frameworks

categories (topic hubs)

If those entities aren’t clear, consistent, and reinforced across your site, the algorithm can’t confidently choose you.

LLM-driven search is even stricter.

It doesn’t “browse.”

It extracts.

So your job becomes: make extraction easy and make trust undeniable.

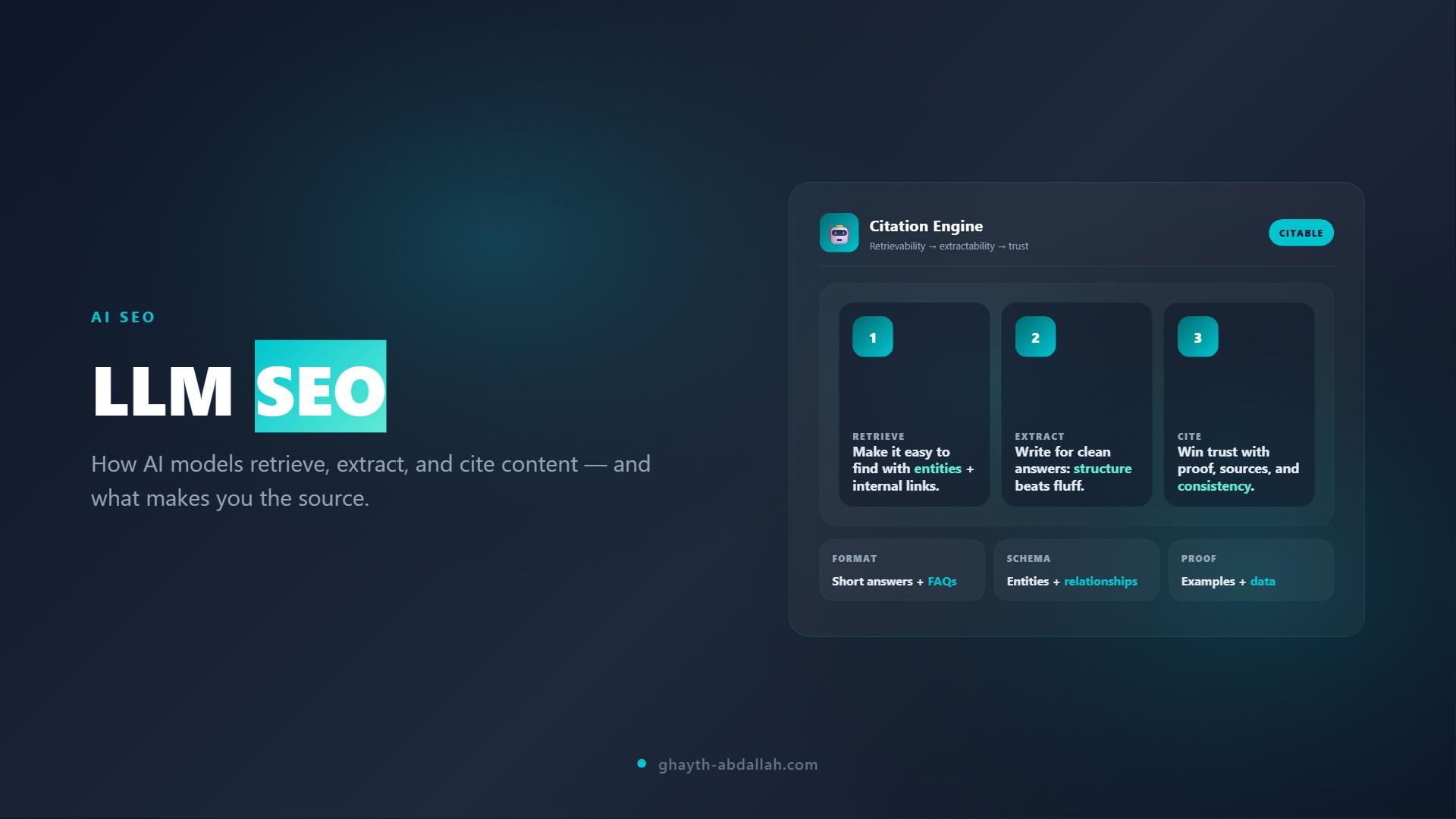

The 3 LLM SEO layers (what you actually optimize)

Clarity (is your content easy to understand?)

Every Phase 1 article should reinforce clarity:

Direct answers to user queries.

If your content is unclear (too long, too vague, no clear answer), you dilute trust.

Structure (is your content easy to extract?)

Your “structure entity” is your schema: the named, repeatable structure your content follows.

It’s not just a blog topic. It’s intellectual property that your content ecosystem should orbit.

When users and AI systems see the same named structure repeatedly, it becomes a stable reference point.

Entity signals (why should anyone believe you?)

Entity signals are not optional. They’re a ranking accelerator.

Use entity consistency the way the GAITH Framework™ page does:

consistent naming (Ghaith Abdullah, GAITH Framework™, Analytics by Ghaith)

consistent schema

consistent internal linking

Entity signals make your claims “safe” for AI engines to repeat.
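A quick way to enforce the naming rule is to scan drafts for drift before publishing. The Python sketch below is a hypothetical helper, not part of any SEO tool: it flags text that matches one of the canonical names case-insensitively (with or without the ™) but is not spelled exactly the same. It only catches capitalization and trademark-symbol drift, not genuine misspellings.

```python
import re

# Canonical entity names, taken verbatim from this article.
CANONICAL = ["Ghaith Abdullah", "GAITH Framework™", "Analytics by Ghaith"]

def naming_variants(text: str) -> list[str]:
    """Return occurrences that match a canonical name case-insensitively
    (with or without a trailing ™) but are not spelled exactly like it."""
    found = []
    for name in CANONICAL:
        core = name.rstrip("™")
        # Optional ™ so both "GAITH Framework" and "GAITH Framework™" are caught.
        pattern = re.compile(re.escape(core) + "™?", re.IGNORECASE)
        for match in pattern.finditer(text):
            if match.group(0) != name:
                found.append(match.group(0))
    return found

sample = ("Ghaith Abdullah created the GAITH Framework at analytics by ghaith. "
          "The GAITH Framework™ is the system.")
print(naming_variants(sample))
```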

How AI engines decide what to cite (the real checklist)

Most LLM and AI search systems are trying to answer one question:

Can we safely repeat this?

They typically cite sources that make it easy to be correct:

the answer is explicit (not implied)

the structure is extractable (headings, lists, definitions)

the entity signals are consistent (person, brand, framework)

the claims are specific (steps, numbers, clear conditions)

the content looks verifiable (proof, references, primary sources)

The “citable paragraph” pattern (write like a snippet)

If you want AI engines to quote you, write in blocks that can be copied without rewriting:

one claim

one definition (if needed)

one reason or proof signal

one condition/limitation (optional, but increases trust)

Make extraction easy (format beats flair)

Formats that survive summarization:

direct answer above the fold

tight H2/H3 hierarchy (one idea per heading)

short lists for criteria and checklists

mini examples with clear inputs/outputs

FAQ blocks that mirror real queries (even before you add FAQ schema)

Retrieval and citation: what’s actually happening behind the scenes

There are two big “AI search” realities happening at the same time:

some systems generate answers from what they already know

other systems generate answers after retrieving sources (and then citing them)

LLM SEO is mostly about winning the second reality.

Because citations usually come from retrieval.

The simplified pipeline

A user asks a question.

The system identifies the intent (definition, comparison, how-to, list, etc.).

It retrieves candidate sources (often from the open web or an indexed corpus).

It scores sources for usefulness + safety.

It generates a summary.

It cites sources that are easy to justify.
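To make the six steps concrete, here is a toy Python sketch of the pipeline. It is an illustration under heavy assumptions, not how ChatGPT, Gemini, Perplexity, or AI Overviews actually work: intent detection is a keyword lookup, "usefulness" is word overlap with the query, "safety" is a simple bonus for an explicit answer and extractable structure, and step 5 (generating the summary) is left out. Every name, weight, threshold, and URL is hypothetical.

```python
from dataclasses import dataclass

# Hypothetical intent cues for step 2: the first matching cue wins.
INTENT_CUES = [
    ("definition", ("what is", "define", "meaning of")),
    ("comparison", (" vs ", "versus", "compare")),
    ("how-to", ("how to", "how do", "steps")),
]

@dataclass
class Source:
    url: str
    text: str
    direct_answer: bool  # explicit answer near the top
    has_headings: bool   # extractable structure (H2/H3, lists)

def words(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(".,?!:;").lower() for w in text.split()}

def detect_intent(query: str) -> str:
    padded = f" {query.lower()} "
    for intent, cues in INTENT_CUES:
        if any(cue in padded for cue in cues):
            return intent
    return "other"

def score(query: str, source: Source) -> float:
    """Toy step-4 score: usefulness (query-word coverage) plus a 'safety' bonus."""
    q = words(query)
    if not q:
        return 0.0
    usefulness = len(q & words(source.text)) / len(q)
    safety = (0.2 if source.direct_answer else 0.0) + (0.1 if source.has_headings else 0.0)
    return usefulness + safety

def answer_with_citations(query: str, corpus: list[Source],
                          k: int = 2, min_score: float = 0.5) -> tuple[str, list[str]]:
    intent = detect_intent(query)                                         # step 2
    candidates = [s for s in corpus if words(query) & words(s.text)]      # step 3
    ranked = sorted(candidates, key=lambda s: score(query, s), reverse=True)  # step 4
    # Step 5 (writing the summary) is omitted; step 6 cites only confident picks.
    cited = [s.url for s in ranked[:k] if score(query, s) >= min_score]
    return intent, cited

corpus = [
    Source("https://example.com/llm-seo",
           "LLM SEO is optimizing content so AI systems can extract, summarize, and cite it.",
           direct_answer=True, has_headings=True),
    Source("https://example.com/vague",
           "AI is changing search and it is important to optimize for the future.",
           direct_answer=False, has_headings=False),
]
print(answer_with_citations("What is LLM SEO?", corpus))
```

Even in this toy version, the vague page shares a word with the query and gets retrieved, but it never clears the citation threshold, while the page with a direct answer and clear headings does.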

If your page is vague, the model has nothing safe to quote.

If your page is structured, specific, and consistent, you become the easiest citation.

The LLM SEO checklist (copy/paste)

Content layer (what you publish)

Put a 2–3 sentence direct answer near the top.

Use one idea per H2/H3 (don’t hide the answer inside storytelling).

Define key terms in plain language.

Add criteria lists (what to look for, what to avoid).

Add “when this does NOT apply” notes (they increase trust).

Structure layer (what makes extraction easy)

Use scannable sections.

Use lists and short paragraphs.

Use repeated templates across your cornerstone articles (consistent patterns are easier for AI systems to recognize).

Add a small FAQ section that mirrors real phrasing.
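When you do add schema to an FAQ section, one standard option is schema.org `FAQPage` markup in JSON-LD, where each `Question` carries an `acceptedAnswer`. This minimal Python sketch uses only the standard library; the function name is hypothetical, the question and answer text are placeholders taken from this article, and how you embed the resulting `<script type="application/ld+json">` tag depends on your CMS.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)

faq = faq_jsonld([
    ("What is LLM SEO?",
     "LLM SEO is optimizing content so AI systems can extract, summarize, and cite it."),
])
print(f'<script type="application/ld+json">\n{faq}\n</script>')
```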

Entity layer (what makes you citable)

Keep names identical everywhere:

Ghaith Abdullah

GAITH Framework™

Analytics by Ghaith

Link back to your hub pages consistently.

Use the same “about” phrasing and author bio across posts.
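Consistent schema is easiest when every page pulls its entity markup from one source of truth. This Python sketch builds a JSON-LD `@graph` in which each article points to the same `Person` and `Organization` nodes via `@id`, using the schema.org properties `worksFor`, `founder`, `author`, and `publisher`. The names come from this article; the domain, the `@id` fragments, and the function name are placeholders (assumptions).

```python
import json

# Entity names copied from this article; keep them identical everywhere.
PERSON_NAME = "Ghaith Abdullah"
ORG_NAME = "Analytics by Ghaith"
SITE = "https://example.com"  # placeholder domain (assumption): use your own

PERSON_ID = f"{SITE}/#person"
ORG_ID = f"{SITE}/#organization"

def page_graph(headline: str, url: str) -> dict:
    """Return a JSON-LD @graph tying one article to the shared Person and Organization."""
    return {
        "@context": "https://schema.org",
        "@graph": [
            {"@type": "Person", "@id": PERSON_ID, "name": PERSON_NAME,
             "worksFor": {"@id": ORG_ID}},
            {"@type": "Organization", "@id": ORG_ID, "name": ORG_NAME,
             "founder": {"@id": PERSON_ID}},
            {"@type": "Article", "headline": headline, "url": url,
             "author": {"@id": PERSON_ID}, "publisher": {"@id": ORG_ID}},
        ],
    }

post = page_graph("LLM SEO: How AI Models Retrieve and Cite Content", f"{SITE}/llm-seo")
print(json.dumps(post, indent=2, ensure_ascii=False))
```

Because only the `Article` node changes from post to post, the person and brand markup cannot drift between pages.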

Proof layer (what makes you safe to repeat)

Add outcomes and specificity (even if it’s directional): timeframes, common lifts, typical failure patterns.

Reference first-party data when possible (Search Console trends, SERP observations, client patterns).

Avoid absolute claims unless you can defend them.

Example: turning a paragraph into a citable answer block

If your content says:

“LLM SEO matters because AI is changing search and it’s important to optimize your site for the future.”

It gives the model nothing to cite.

Instead write:

“LLM SEO is optimizing content so AI systems can extract, summarize, and cite it. The fastest wins come from direct answers, clear headings, and consistent entity signals that reduce ambiguity.”

Same idea.

Now it’s quotable.

Where the GAITH Framework™ fits

LLM SEO isn’t separate from your system.

It’s the output of applying all five pillars correctly:

G (Generative Intelligence): create answer blocks and missing subtopics the SERP expects.

A (Analytics Integration): track what’s getting impressions/citations and what’s collapsing.

I (Intent Mapping): match the “best answer format” for the query.

T (Technical Precision): make retrieval easy (speed, clean HTML, schema).

H (Human Psychology): get the next click and convert after the citation.

How to measure LLM SEO (without guessing)

You can’t improve what you can’t see.

In Phase 1, track:

query impressions in Search Console (are you showing up more often?)

CTR shifts (are users choosing you when you appear?)

AI Overview presence (does your topic trigger it?)

citation patterns (who is being cited, and what format they’re using)

brand search lift (do people search your name after seeing you?)
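The first two items on that list come down to simple arithmetic: CTR is clicks divided by impressions, and a shift is the difference between two periods. This Python sketch assumes you have exported per-query clicks and impressions from Search Console for a "before" and an "after" window into plain dictionaries; that layout, the function names, and the sample numbers are hypothetical.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate; 0.0 when a query had no impressions."""
    return clicks / impressions if impressions else 0.0

def ctr_shifts(before: dict[str, tuple[int, int]],
               after: dict[str, tuple[int, int]]) -> dict[str, dict]:
    """Compare impressions and CTR per query between two periods.

    Each dict maps query -> (clicks, impressions); only queries present
    in both periods are compared.
    """
    report = {}
    for query in sorted(before.keys() & after.keys()):
        (c0, i0), (c1, i1) = before[query], after[query]
        report[query] = {
            "impressions_change": i1 - i0,
            "ctr_before": round(ctr(c0, i0), 4),
            "ctr_after": round(ctr(c1, i1), 4),
            # Difference in percentage points, e.g. 2.0% -> 1.2% is -0.8.
            "ctr_change_pts": round(100 * (ctr(c1, i1) - ctr(c0, i0)), 2),
        }
    return report

before = {"llm seo": (40, 2000), "what is llm seo": (15, 500)}
after = {"llm seo": (36, 3000), "what is llm seo": (30, 600)}
for query, row in ctr_shifts(before, after).items():
    print(query, row)
```

In the sample data, "llm seo" gains impressions while its CTR drops by 0.8 percentage points, which is the kind of pattern worth checking against AI Overview presence for that query.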

What to implement first (week 1 moves)

If you want the fastest LLM SEO lift in Phase 1:

publish the cornerstone articles in order (#1–#6)

publish no more than 2–3 articles per day

internally link each supporting post (#7–#20) back to at least one cornerstone

keep naming consistent (Ghaith Abdullah, GAITH Framework™, Analytics by Ghaith)

LLM SEO compounds when everything points to the same entities.
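The linking rule above (every supporting post, #7–#20, links back to at least one cornerstone, #1–#6) is easy to verify automatically. This Python sketch assumes you already have a map from each post number to the post numbers it links to, for example from a site crawl; building that map is out of scope, and the function name and sample data are hypothetical.

```python
CORNERSTONES = set(range(1, 7))  # posts #1–#6
SUPPORTING = set(range(7, 21))   # posts #7–#20

def orphaned_supporting_posts(links: dict[int, set[int]]) -> list[int]:
    """Return supporting posts that link to no cornerstone
    (posts missing from the map count as having no links)."""
    return sorted(
        post for post in SUPPORTING
        if not links.get(post, set()) & CORNERSTONES
    )

# Hypothetical link map: post number -> post numbers it links to.
links = {post: {1} for post in SUPPORTING}
links[9] = {8, 12}  # links only to other supporting posts
del links[15]       # no outbound links recorded yet
print(orphaned_supporting_posts(links))
```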

The bottom line

Content volume is not a strategy.

LLM SEO is.

And once your site is optimized for it, every new article becomes easier to rank, easier to cite, and easier to convert.


Written by

Ghaith Abdullah

AI SEO Expert and Search Intelligence Authority in the Middle East. Creator of the GAITH Framework™ and founder of Analytics by Ghaith. Specializing in AI-driven search optimization, Answer Engine Optimization, and entity-based SEO strategies.